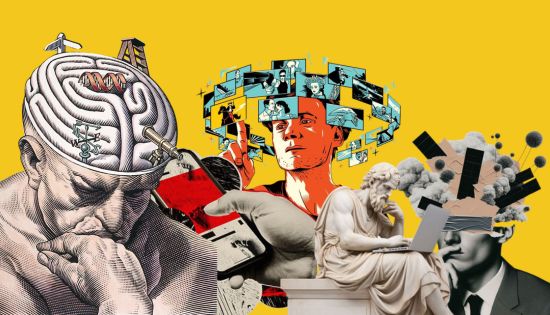

Generative AI And Data Protection: What Are The Biggest Risks For Employers?

If you’re an employer tempted to experiment with generative AI tools like ChatGPT, there are certain data protection pitfalls that you’ll need to consider. With an increase in privacy and data protection legislation in recent years – in the US, Europe and around the world – you can’t simply feed human resources data into a generative AI tool. After all, personnel data is often highly sensitive, including performance data, financial information, and even health data.

Obviously, this is an area where employers should seek proper legal guidance. It’s also a good idea to consult with an AI expert on the ethics of using generative AI (so you’re not just acting within the law but also acting ethically and transparently). But as a starting point, here are two of the main considerations that employers should be aware of.

Feeding Personal Data Into Generative AI Systems

As I’ve said, employee data is often highly sensitive and personal. It’s precisely the kind of data that is, depending on your jurisdiction, typically subject to the highest forms of legal protection.

And this means it’s extremely risky to feed that data into a generative AI tool. Why? Because many generative AI tools use the information given to them to fine-tune the underlying language model. In other words, a tool could use the information you feed into it for training purposes – and could potentially disclose that information to other users in the future. So, let’s say you use a generative AI tool to create a report on employee compensation based on internal employee data. That data could potentially be used by the AI tool to generate responses to other users (outside of your organization) in the future. Personal data could, quite easily, be absorbed into the generative AI tool and reused.

This isn’t as underhand as it sounds. Delve into the terms and conditions of many generative AI tools, and they’ll clearly state that data submitted to the AI could be used for training and fine-tuning or disclosed when users ask to see examples of questions previously submitted. Therefore, a first port of call is to always understand exactly what you’re signing up for when you agree to the terms of use.

As a basic protection, I would recommend that any data submitted to a generative AI service should be anonymized and stripped of any personally identifiable data. This is also known as “deidentifying” the data.
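To make the idea concrete, here is a minimal Python sketch of deidentifying an employee record before it goes anywhere near a generative AI service. Everything in it is an assumption for illustration: the field names (`name`, `email`, `employee_id` and so on), the `deidentify` and `pseudonym` helpers, and the simple email pattern are hypothetical and would need to match your own HR data. It is a starting point, not a complete or legally sufficient approach.

```python
import hashlib
import re

# Hypothetical field names for direct identifiers; adjust to your own HR schema.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address", "national_id"}

# Deliberately simple email pattern, for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonym(value: str, salt: str) -> str:
    """Return a short salted SHA-256 reference in place of a real ID."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]


def deidentify(record: dict, salt: str) -> dict:
    """Drop direct identifiers, pseudonymize the ID, redact emails in text."""
    cleaned = {}
    for key, value in record.items():
        if key in DIRECT_IDENTIFIERS:
            continue  # remove the field entirely
        if key == "employee_id":
            # Keep rows linkable internally without exposing the real ID.
            cleaned["employee_ref"] = pseudonym(str(value), salt)
        elif isinstance(value, str):
            # Catch email addresses that slipped into free-text fields.
            cleaned[key] = EMAIL_RE.sub("[REDACTED_EMAIL]", value)
        else:
            cleaned[key] = value
    return cleaned
```

Note that replacing an ID with a salted hash is pseudonymization rather than full anonymization, and that remaining fields such as job title plus salary can still identify someone in a small team. Treat a step like this as one layer of protection and keep the salt secret inside your organization.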

Risks Related To Generative AI Outputs

It’s not just about the data you feed into a generative AI system; there are also risks associated with the output or content created by generative AIs. In particular, there’s the risk that output from generative AI tools may be based on personal data that was collected and processed in violation of data protection laws.

As an example, let’s say you ask a generative AI tool to generate a report on typical IT salaries for your local area. There’s a risk that the tool could scrape personal data from the internet – without consent, in violation of data protection laws – and then serve that information up to you. Employers who use any personal data offered up by a generative AI tool could potentially bear some liability for the data protection violation. It’s a legal gray area for now, and most likely, the generative AI provider would bear most or all of the responsibility, but the risk is there.

Cases like this are already emerging. Indeed, one lawsuit has claimed that ChatGPT was trained on “massive amounts of personal data,” including medical records and information about children, collected without consent. You don’t want your organization to get inadvertently wrapped up in a lawsuit like this. Basically, we’re talking about an “inherited” risk of breaching data protection laws. But it’s a risk nonetheless.

In some cases, gathering data that is already publicly available on the internet doesn’t qualify as collecting personal data, because the data is already out there. However, this varies across jurisdictions, so be aware of the nuances of your own. Also, do your due diligence on any generative AI tools that you’re thinking of using. Look at how they collect data and, wherever possible, negotiate a service agreement that reduces your inherited risk. For example, your agreement could include assurances that the generative AI provider complies with data protection laws when collecting and processing personal data.

The Way Forward

It’s vital that employers consider the data protection and privacy implications of using generative AI and seek expert advice. But don’t let that put you off using generative AI altogether. Used carefully and within the confines of the law, generative AI can be an incredibly valuable tool for employers.

It’s also worth noting that new tools are being developed that take data privacy into account. One example comes from Harvard, which has developed an AI sandbox tool that enables users to harness certain large language models, including GPT-4, without giving away their data. Prompts and data entered by the user are only viewable to that individual, and cannot be used to train the models. Elsewhere, organizations are creating their own proprietary versions of tools like ChatGPT that do not share data outside of the organization.

Follow Me on LinkedIn – Chameera De Silva
