AI in the Classroom: Is ChatGPT Helping or Hurting Student Thinking?

In lecture halls, libraries, and late-night study sessions, one tool has become nearly as common as notebooks and laptops: ChatGPT. From summarizing readings to drafting full essays, students are increasingly turning to the AI-powered chatbot for academic support. But as its popularity grows, so do concerns about what it might be taking away: namely, students’ critical thinking skills.

“It Did the Job But I Didn’t Learn Anything”

Samantha, a third-year commerce student, recalls using ChatGPT to help her finish a reflective essay due in less than two hours. “I was behind on work and tired,” she said. “I asked it to write a paragraph about business ethics. It sounded perfect, so I pasted it straight in.”

She submitted the paper and received a decent grade, but she now admits: “If someone asked me to explain what I wrote, I probably couldn’t have. It did the job, but I didn’t really learn anything.”

Stories like Samantha’s are becoming more common, and they’re raising red flags among educators.

Fast Answers, Slow Thinking?

At the heart of the issue is the growing reliance on AI to do the intellectual heavy lifting. Teachers and academic staff report seeing more formulaic assignments, fewer analytical insights, and a decline in student engagement during discussions.

“Students who use AI for outlines and ideas might start out with good intentions,” said Dr. Annika Rao, a senior lecturer in education. “But the temptation to let it take over completely is very real. That short-circuits the critical thinking process.”

She describes one assignment where students were asked to debate whether surveillance improves safety or compromises privacy. “Several essays were grammatically perfect but felt generic and lacked any real depth. When questioned, a few students admitted ChatGPT had ‘helped a lot.’ Too much, in some cases.”

What Is Being Lost?

Critical thinking isn’t about knowing the right answer; it’s about understanding why it’s right (or wrong). It’s the ability to ask good questions, challenge assumptions, connect ideas, and build a reasoned argument.

When students rely on AI-generated responses, they may miss out on:

- Making decisions amid ambiguity: AI often provides polished responses without showing the steps it took, so students don’t experience the discomfort and growth that come from wrestling with uncertain answers.
- Learning from mistakes: Errors are part of learning, but with ChatGPT offering near-perfect outputs, students may skip the trial-and-error process that deepens understanding.
- Ownership of ideas: There’s a subtle but powerful shift when students stop feeling like the authors of their own work. This affects confidence, creativity, and academic integrity.

When It Helps and When It Hurts

Not all uses of ChatGPT are problematic. Some students use it to clarify concepts, brainstorm essay structures, or reword sentences to improve clarity. In these cases, it can function like a tutor or writing assistant.

For example, Ravi, a medical student, uses the chatbot to quiz himself on anatomy. “I don’t copy answers,” he says. “I use it to test my recall and then cross-check with textbooks. It’s like having an instant question bank.”

Educators agree that this kind of active use, where the student remains in control, can complement learning. The trouble begins when the AI moves from assistant to author.

Rethinking Assessments

In response, some universities are adjusting how they evaluate students. Traditional take-home essays are being replaced with in-class writing tasks, oral exams, and presentations. These methods make it harder to outsource thinking and easier to spot authentic understanding.

Others are encouraging “process-based” assignments. Instead of grading only the final essay, instructors ask for drafts, annotated bibliographies, or reflective journals showing how the student arrived at their conclusions.

Building AI Literacy

Rather than banning tools like ChatGPT, educators are increasingly advocating for responsible use. That means teaching students how to evaluate, question, and even challenge the information AI provides.

“Students need to learn how to work with AI, not against it, but not under it either,” said Dr. Rao. “We must teach them to be critical users, not passive consumers.”

This might involve assignments that require students to compare AI responses with human-written sources, or that ask students to critique a ChatGPT-generated argument.

The Bottom Line

ChatGPT is not going away. Its speed, fluency, and accessibility make it an appealing tool in the academic world. But if left unchecked, it could quietly weaken the very skills that education aims to build.

The solution isn’t to fear AI but to frame its use within a deeper culture of thoughtfulness and integrity. In a world of instant answers, the real value lies in learning how to think, not just what to write.

Related News

D. S. Senanayake College Marks 59 Years, Reaffirms ‘Country Before Self’ Legacy

"Country Before Self", an immortal slogan entrenched in the heartbeats of every Senanayakian, stands as the guiding principle of D. S. Senanayake…

Read MorePaper Plane Lands in Galle: A Mini Lit Fest with Nifraz Rifaz

Galle Fort is set to welcome a unique literary experience this weekend as Paper Plane Lands in Galle, a two-day mini literature…

Read MoreGateway Expands Fleet to Further Strengthen Its Rowing Programme

Gateway Rowing marked a historic milestone with the acquisition of two state-of-the-art Falcon Racing boats: a quad-four convertible, Dreadnought, and a single…

Read MoreCISD Launches to Transform Sales Education and Build Sri Lanka’s Next Generation of Sales Professionals

Sri Lanka has long produced talented, resilient, and hardworking sales professionals across every major industry. Yet for decades, the sales function—despite being…

Read MoreRead • Watch • Learn

Stories often teach us more than textbooks ever could. This EduWire series explores books, films and series as spaces of learning –…

Read MoreCourses

-

The future of higher education tech: why industry needs purpose-built solutions

For years, Institutions and education agencies have been forced to rely on a patchwork of horizontal SaaS solutions – general tools that… -

MBA in Project Management & Artificial Intelligence – Oxford College of Business

In an era defined by rapid technological change, organizations increasingly demand leaders who not only understand traditional project management, but can also… -

Scholarships for 2025 Postgraduate Diploma in Education for SLEAS and SLTES Officers

The Ministry of Education, Higher Education and Vocational Education has announced the granting of full scholarships for the one-year weekend Postgraduate Diploma… -

Shape Your Future with a BSc in Business Management (HRM) at Horizon Campus

Human Resource Management is more than a career. It’s about growing people, building organizational culture, and leading with purpose. Every impactful journey… -

ESOFT UNI Signs MoU with Box Gill Institute, Australia

ESOFt UNI recently hosted a formal Memorandum of Understanding (MoU) signing ceremony with Box Hill Institute, Australia, signaling a significant step in… -

Ace Your University Interview in Sri Lanka: A Guide with Examples

Getting into a Sri Lankan sate or non-state university is not just about the scores. For some universities' programmes, your personality, communication… -

MCW Global Young Leaders Fellowship 2026

MCW Global (Miracle Corners of the World) runs a Young Leaders Fellowship, a year-long leadership program for young people (18–26) around the… -

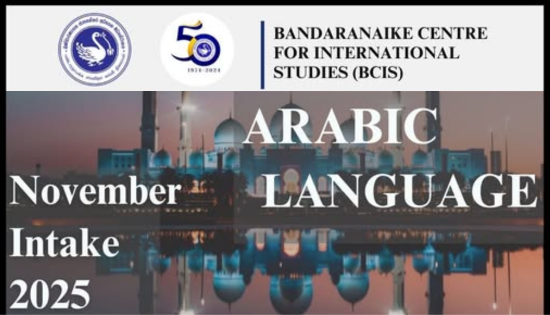

Enhance Your Arabic Skills with the Intermediate Language Course at BCIS

BCIS invites learners to join its Intermediate Arabic Language Course this November and further develop both linguistic skills and cultural understanding. Designed… -

Achieve Your American Dream : NCHS Spring Intake Webinar

NCHS is paving the way for Sri Lankan students to achieve their American Dream. As Sri Lanka’s leading pathway provider to the… -

National Diploma in Teaching course : Notice

A Gazette notice has been released recently, concerning the enrollment of aspiring teachers into National Colleges of Education for the three-year pre-service… -

IMC Education Features Largest Student Recruitment for QIU’s October 2025 Intake

Quest International University (QIU), Malaysia recently hosted a pre-departure briefing and high tea at the Shangri-La Hotel in Colombo for its incoming… -

Global University Employability Ranking according to Times Higher Education

Attending college or university offers more than just career preparation, though selecting the right school and program can significantly enhance your job… -

Diploma in Occupational Safety & Health (DOSH) – CIPM

The Chartered Institute of Personnel Management (CIPM) is proud to announce the launch of its Diploma in Occupational Safety & Health (DOSH),… -

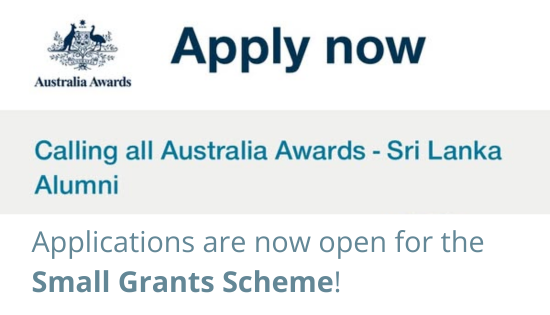

Small Grant Scheme for Australia Awards Alumni Sri Lanka

Australia Awards alumni are warmly invited to apply for a grant up to AUD 5,000 to support an innovative project that aim… -

PIM Launches Special Programme for Newly Promoted SriLankan Airlines Managers

The Postgraduate Institute of Management (PIM) has launched a dedicated Newly Promoted Manager Programme designed to strengthen the leadership and management capabilities…

Newswire

-

Sri Lanka signs landmark agreement to increase plantation wages

ON: February 1, 2026 -

Two Arrested with Over 5 Kilograms of Heroin in Bentota

ON: February 1, 2026 -

14 Behind Bars After Fake CID Officers Heist Temple Statue

ON: February 1, 2026 -

Weather today: Rain expected in several areas

ON: February 1, 2026 -

Foundation stone laid for ‘FIFA Arena’ mini pitch in Negombo

ON: February 1, 2026